Android XR Gemini Integration Explained: How Google’s AI Works in XR

Android XR Gemini integration represents Google’s bet on a future where your AI assistant doesn’t just live in your phone. It sees what you see, hears what you hear, and helps you navigate the world hands-free. Google describes Android XR as the first Android platform built in the Gemini era. It combines extended reality hardware with Google’s flagship AI to create something genuinely different from what’s come before.

What Makes Gemini Central to Android XR

At its core, Android XR Gemini integration pairs smart glasses and headsets with Gemini so the assistant can see what you see and hear what you hear. The system understands your context and remembers what’s important to you. This isn’t just voice commands bolted onto XR hardware. It’s a fundamental rethinking of how AI assistants work.

The integration works across two main device types: headsets like Samsung’s Galaxy XR and upcoming smart glasses from partners including Gentle Monster and Warby Parker. Both leverage Gemini, but in different ways depending on the hardware.

Real-World Use Cases That Actually Make Sense

Google has demonstrated several practical applications that show how Gemini integration works in daily scenarios. During a demo, the system provided real-time translation by automatically detecting language and overlaying the translation directly on your view of the world. Someone speaking Farsi? The glasses translate it to English right in your field of vision. Switch to Hindi mid-conversation? Gemini adapts without you touching a single setting.

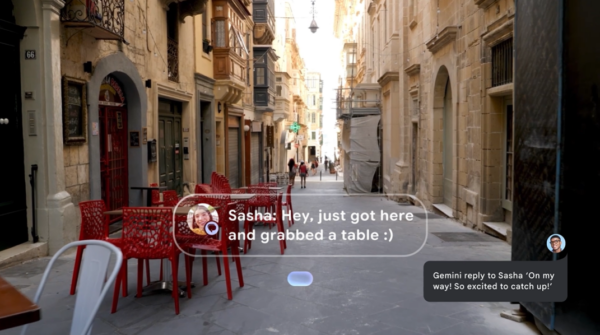

Navigation gets the same treatment. The system handles turn-by-turn directions through natural conversation. You can message friends, make appointments, and take photos just by talking. Ask for restaurant recommendations while walking down the street, and Gemini overlays Google Maps navigation to guide you there.

The glasses version particularly shines for quick access to information, surfacing it right when you need it. You can get directions, translations, and message summaries in your line of sight or through built-in speakers without reaching for your phone.

How It Works on Headsets vs. Glasses

On headsets like Samsung’s Galaxy XR, you can have conversations with Gemini about what you’re seeing or use it to control your device. The system understands your intent whether you’re planning something, researching topics, or working through tasks.

In Google Maps, Gemini functions as a personal tour guide and offers suggestions as you explore 3D city models. On YouTube, you can ask Gemini to find specific content or explain what you’re watching. The Circle to Search feature lets you draw circles around real-world objects in passthrough mode to instantly get information. This works because Gemini can process what the cameras see.

Smart glasses take a different approach. Google is developing two types. AI glasses designed for screen-free assistance use speakers, microphones, and cameras. Display AI glasses add an in-lens display showing information like navigation or translation captions. Both types let you chat naturally with Gemini, but the display version gives you visual feedback without blocking your view of the real world.

The Technical Side: How Developers Can Use It

For developers, the Android XR Gemini integration offers access through the Gemini Live API via Firebase AI Logic. The Live API handles both audio input and output. This differs from traditional approaches built on separate speech recognition and text-to-speech systems, because the Gemini Live API manages the conversational flow itself in a more natural way.

The platform also supports agentic experiences and allows apps to create more complex interactions. However, developers should note that the Gemini Live API requires a persistent internet connection and has limitations on concurrent connections. This matters for deployment at scale.
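One way to plan for a cap on concurrent connections is to gate sessions on the client or backend side. The sketch below is a minimal illustration in Python, not Firebase AI Logic code: `SessionLimiter`, `fake_live_session`, and `run_demo` are hypothetical names, the session is simulated with a short sleep instead of a network call, and the limit of 2 is an arbitrary value chosen for the demo, not a documented quota.

```python
import asyncio
from contextlib import asynccontextmanager


class SessionLimiter:
    """Caps how many sessions run at once (hypothetical helper, not a Firebase API)."""

    def __init__(self, max_sessions: int) -> None:
        if max_sessions < 1:
            raise ValueError("max_sessions must be at least 1")
        self._slots = asyncio.Semaphore(max_sessions)
        self.active = 0  # sessions currently open
        self.peak = 0    # highest number of sessions open at the same time

    @asynccontextmanager
    async def session(self):
        # Wait here until a slot is free, then count the session as active.
        async with self._slots:
            self.active += 1
            self.peak = max(self.peak, self.active)
            try:
                yield
            finally:
                self.active -= 1


async def fake_live_session(limiter: SessionLimiter, seconds: float) -> None:
    # Stand-in for a real streaming session; no network call is made.
    async with limiter.session():
        await asyncio.sleep(seconds)


async def run_demo(total: int = 5, max_sessions: int = 2) -> int:
    # Start `total` sessions at once and report the peak concurrency observed.
    limiter = SessionLimiter(max_sessions)
    await asyncio.gather(
        *(fake_live_session(limiter, 0.01) for _ in range(total))
    )
    return limiter.peak


if __name__ == "__main__":
    print("peak concurrent sessions:", asyncio.run(run_demo()))
```

Requests beyond the cap wait for a free slot rather than failing, which may or may not suit a voice interface; an app could just as reasonably reject the extra request and tell the user to try again.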

Privacy and Real-World Testing

Google’s been careful about the privacy implications of AI-powered cameras on your face. The company began gathering feedback on prototypes with trusted testers to build a truly assistive product that respects privacy for you and those around you.

This testing phase matters because smart glasses raise legitimate concerns about recording and surveillance that previous products like Google Glass never truly addressed. The Android XR approach seems designed to learn from those mistakes before a wide release.

What’s Coming and When

Samsung’s Galaxy XR headset recently gained new features. Likeness creates a realistic digital representation for video calls, PC Connect enables streaming games, and Travel mode provides stable viewing on airplanes. Samsung is also developing smart glasses codenamed Haean, expected in 2026.

Google’s partnership strategy extends beyond Samsung. Besides Gentle Monster and Warby Parker, the company announced plans to work with Kering Eyewear. The goal is to create stylish glasses people actually want to wear all day, instead of flashy tech demos that scream prototype.

XREAL revealed Project Aura, described as the first wired XR glasses in this category. It includes a 70-degree field of view and optical see-through technology. These devices layer digital content directly onto your physical view and give you a massive private canvas for multiple windows. This proves useful for taking your workspace or entertainment with you without blocking your surroundings.

The Bigger Picture

Android XR represents Google’s attempt to define spatial computing for the Android ecosystem. The platform enables automatic spatialization of traditional 2D apps so developers don’t have to rebuild everything from scratch. The open platform approach contrasts with Apple’s Vision Pro strategy and could attract more developers.

The Android XR Gemini integration gives the platform something genuinely differentiated: an AI assistant that’s contextually aware of your physical environment rather than just your digital one. Commercial availability is ramping up throughout 2025. The core concept represents a meaningful shift in how we might interact with computers in the years ahead.
